Diving into AI: A series of 3 episodes

Hi, I'm Kevin Séjourné, PhD in computer science and senior R&D engineer at Cloud Temple. As you can imagine, I've been writing a lot of code over the last 20 years. As a passionate explorer of LLMs, I've realised that they can now write code for me. So much the better! But as I'm used to relying on scientific observations, I decided to test the quality of their work.

Follow the three episodes of my study:

- Episode 1: I automate my work with LLMs

- Episode 2: Using LLMs to correct code and rebuild forgotten algorithms

- Episode 3: Completing the initial program, testing, testing harder

Episode 1: I automate my work with LLMs

LLMs can now write code for me. I'll show you how. Let's start with a simple and common task: rewriting all or part of an application.

Code often has to be rewritten to:

- Address obsolescence

- Correct an unsuitable architecture

- Boost performance

- Change behaviour

- Switch to another programming language

Where do we start?

We chose a small Python program: only 31 KB in total, about 1,000 lines. We're going to address some obsolescence and delete a part of the program that has become useless. Following a change in requirements, this program had two possible tasks to perform instead of one. Gradually, the new task completely replaced the old one, which should now be removed to reduce the attack surface. We're also revising the architecture slightly and looking for performance gains and modern dependencies: the venerable requests library has had its day.

Rust is a good candidate for rewriting a Python program. Its strong static typing, checked at compile time, is the first advantage when rewriting with an LLM: it forces early detection of generation problems and pushes the LLM to generate more logically consistent code, drawing its attention to an additional dimension. Furthermore, Rust is a modern language that handles concurrency well, whereas Python is limited by its global interpreter lock (GIL).
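To make the typing argument concrete, here is a minimal Python illustration (the functions are hypothetical, not taken from the converted program). Python type hints are not checked when the code runs, so a type mismatch hidden in a rarely executed branch goes unnoticed until that branch executes, unless an external checker such as mypy is run. The Rust compiler rejects the equivalent mismatch before the program can run at all.

```python
def total_size_kb(sizes: list[int]) -> int:
    """Sum a list of file sizes expressed in kilobytes."""
    return sum(sizes)


def describe(sizes: list[int], verbose: bool) -> str:
    if verbose:
        # Bug: str + int. The hints say this returns str, but nothing checks it
        # until this branch actually runs.
        return "total: " + total_size_kb(sizes)
    return "ok"


print(describe([10, 21], verbose=False))  # prints "ok": the bug stays hidden

try:
    describe([10, 21], verbose=True)
except TypeError as err:
    print("only caught at run time:", err)
```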

We have unlimited access to GPT-4o via the AI portal developed by Cloud Temple. If in doubt about an answer, we can turn to groq.com with Llama3 70B and Mixtral 8x7B. For confidential parts, a local Llama3-8b_q4 (4-bit quantised) running in Jan (jan.ai) on an RTX GPU is available.

The capabilities of the models used determine the size of the program we can convert. Mixtral handles contexts of up to 32k tokens, and even though GPT and Gemini handle larger contexts, they are subject to the "lost in the middle" effect: they do not reliably pay attention to information located in the middle of their context. For now, a larger program would prevent the LLM from focusing its attention on every part of the code.

We use VSCode to paste the generated code; this is where all the parts of the new program are assembled.

So what's next?

How do I tell the LLM to convert my code? How do I give it the code to convert?

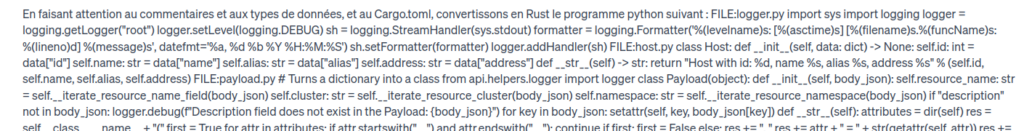

Building a prompt means keeping things simple. And keeping it simple is complicated. Here's the prompt:

"Paying attention to comments and data types, and to Cargo.toml, let's convert the following Python program into Rust:

[Here comes the concatenation of all the application's code]"

And yes, you have to dare to paste 31 KB into the text entry box. But it's the easiest thing to do.

In earlier experiments, we copied the code in chunks. But that raises some tricky questions:

- How big should the pieces be? One file? One function? One class? How many KB? How many lines?

- In what order should the pieces be included? Alphabetical order? Topological sorting? Functional sorting? What about cross-dependencies?
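Topological sorting, one of the orderings listed above, can be sketched in a few lines of Python. This is only an illustration of the idea, not what the study used (it sent everything at once); it assumes a flat project where each local module is a `.py` file at the top level, and the function names are hypothetical. The standard `graphlib` module (Python 3.9+) also gives a blunt answer to the cross-dependency question: an import cycle raises `CycleError`, meaning no such order exists.

```python
import ast
from graphlib import CycleError, TopologicalSorter


def local_imports(source: str, modules: set[str]) -> set[str]:
    """Return the local module names imported by one Python source file."""
    found = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module:
            names = [node.module]
        else:
            continue
        for name in names:
            top = name.split(".")[0]
            if top in modules:
                found.add(top)
    return found


def topological_file_order(files: dict[str, str]) -> list[str]:
    """Order files so that each one comes after the local modules it imports."""
    modules = {name.removesuffix(".py") for name in files}
    graph = {}
    for name, source in files.items():
        deps = {dep + ".py" for dep in local_imports(source, modules)}
        deps.discard(name)  # ignore a module importing itself
        graph[name] = deps  # graphlib expects node -> set of predecessors
    # Raises CycleError when two files depend on each other.
    return list(TopologicalSorter(graph).static_order())


files = {
    "main.py": "import config\nfrom utils import fetch\n",
    "utils.py": "import config\n",
    "config.py": "TIMEOUT = 10\n",
}
print(topological_file_order(files))  # ['config.py', 'utils.py', 'main.py']
```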

Simpler: everything in one go. To do this, we went through all the program files and concatenated them into one large file, in which each file is introduced by a marker of the form FILE main.py :

[followed by the body of the file]

Specifying the original file names is essential in Python, because the language uses file names to resolve imports between modules. The names also serve as useful markers later on, to draw the model's attention to a specific part of the program.
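The concatenation step can be sketched as follows. This is a minimal sketch, not the study's own script: the walk over `*.py` files, the alphabetical order and the function names are assumptions; only the `FILE <name> :` marker form and the instruction text come from the article.

```python
from pathlib import Path

INSTRUCTION = (
    "Paying attention to comments and data types, and to Cargo.toml, "
    "let's convert the following Python program into Rust:"
)


def concatenate_sources(root: str) -> str:
    """Concatenate every .py file under root, each preceded by a FILE marker."""
    base = Path(root)
    parts = []
    for path in sorted(base.rglob("*.py")):
        rel = path.relative_to(base).as_posix()
        body = path.read_text(encoding="utf-8")
        # Keeping the relative filename lets the model resolve Python imports.
        parts.append(f"FILE {rel} :\n{body.rstrip()}\n")
    return "\n".join(parts)


def build_prompt(root: str) -> str:
    """Instruction first, then the whole program in a single message."""
    return INSTRUCTION + "\n\n" + concatenate_sources(root)
```

Sorting keeps the output reproducible from one run to the next; any other order (for example a topological one) could be swapped in without changing the marker format.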

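The prompt therefore consists of the instruction above, followed by every file of the program in turn: FILE main.py : and its body, then the next FILE marker and its body, and so on up to the last file.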

So when does this code generation start?

All right, here's what happens when we press [enter]:

We can see that GPT-4o is unable to generate the full output: the maximum output length has been reached. We obtained some functions from the first files, but much of the code is still missing. Furthermore, the code generated by GPT-4o is often more verbose than the original code, by a ratio of about 3 to 2. This is not specific to the Python/Rust pair; it is rather an artifact of LLM code generation. Like humans, LLMs seem to have trouble giving short answers.
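When the same request goes through an API rather than a chat interface (an assumption: the study used Cloud Temple's portal), truncation does not have to be spotted by eye. In OpenAI's Chat Completions API, each choice carries a `finish_reason`, which is `"length"` when the output token limit was hit. The sketch below checks that field on the decoded JSON response; the second helper is a hypothetical heuristic for pasted chat answers, where an unmatched code fence usually means the output was cut off.

```python
FENCE = "`" * 3  # a Markdown code fence, built at run time


def is_truncated(response: dict) -> bool:
    """True if the first choice stopped because the output token limit was reached."""
    choices = response.get("choices") or []
    if not choices:
        raise ValueError("response contains no choices")
    return choices[0].get("finish_reason") == "length"


def has_unclosed_fence(text: str) -> bool:
    """Heuristic: an odd number of fence lines suggests a cut-off code block."""
    fence_lines = [line for line in text.splitlines() if line.lstrip().startswith(FENCE)]
    return len(fence_lines) % 2 == 1


sample = {"choices": [{"message": {"role": "assistant", "content": "..."},
                       "finish_reason": "length"}]}
print(is_truncated(sample))  # True
```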

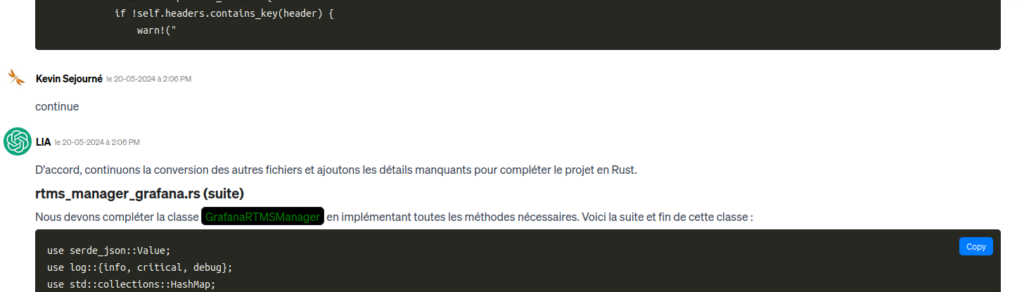

You can simply ask the LLM to continue.

The problem is that it ends up losing track of what it has and hasn't processed yet. 31 KB of code, plus about 1.5 × 31 KB of code expected in output, plus Markdown decoration: in the best case, we already need close to 80 KB of context. Unfortunately, when asked to continue without any real reference point, it ended up converting files it had already processed. The context keeps growing with every duplicate until it exceeds what GPT-4o can handle (its context window is 128k tokens), resulting in a loss of context.
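The context arithmetic above can be written down as a small worked example. The 1.5 expansion factor comes from the 3-to-2 ratio observed in the study; the 4 bytes per token used for the conversion is an assumption (a common rough rule of thumb for English text, not a property of GPT-4o's tokenizer, and code often tokenizes differently). The function names are hypothetical.

```python
def context_budget_kb(source_kb: float, expansion: float = 1.5,
                      overhead_kb: float = 0.0) -> float:
    """Input code + expected generated code + formatting overhead, in KB."""
    return source_kb + source_kb * expansion + overhead_kb


def approx_tokens(size_kb: float, bytes_per_token: float = 4.0) -> int:
    """Rough KB-to-token conversion; bytes_per_token is an assumption."""
    return round(size_kb * 1024 / bytes_per_token)


budget = context_budget_kb(31)  # 31 KB in, about 1.5 x 31 KB out
print(budget, approx_tokens(budget))  # 77.5 19840
```

Whatever ratio is used, the point is to compare like with like: context windows are quoted in tokens, while file sizes are measured in bytes.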

Let's start again from the beginning. This time, however, the prompt asks the LLM to convert only the first file. Then, once we've obtained the result, we ask for the second file, and so on. In this way, the LLM keeps the whole program in context to resolve references, but focuses its attention on only one transformation at a time.
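The file-by-file conversation can be sketched as a loop over a single chat history. This is a minimal sketch under stated assumptions: the OpenAI-style `{"role": ..., "content": ...}` message shape, the wording of the follow-up messages and the `ask` callback (a hypothetical wrapper around whatever model is used) are not from the study. Cargo.toml is requested only at the end, once every code file exists.

```python
from typing import Callable

Message = dict[str, str]  # OpenAI-style chat message: {"role": ..., "content": ...}

INSTRUCTION = (
    "Paying attention to comments and data types, and to Cargo.toml, "
    "let's convert the following Python program into Rust:"
)


def convert_file_by_file(
    program: str,
    filenames: list[str],
    ask: Callable[[list[Message]], str],
) -> dict[str, str]:
    """Keep the whole program in context, but request one converted file per turn."""
    history: list[Message] = []
    results: dict[str, str] = {}
    turns = [f"Convert only FILE {name} for now." for name in filenames]
    # Cargo.toml comes last, once the model has produced every code file.
    turns.append("Now write the Cargo.toml for the Rust files you generated.")
    targets = filenames + ["Cargo.toml"]
    for index, (target, turn) in enumerate(zip(targets, turns)):
        if index == 0:
            # The first message carries the instruction and the full program.
            turn = f"{INSTRUCTION}\n\n{program}\n\n{turn}"
        history.append({"role": "user", "content": turn})
        answer = ask(history)  # hypothetical callback that queries the model
        history.append({"role": "assistant", "content": answer})
        results[target] = answer
    return results
```

Because the history is resent on every turn, the program text stays in context, while each user message names exactly one target file.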

Little by little, we can copy and paste our entire new program into VSCode. If LLMs are verbose, humans need to be concise.
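Part of the copy-and-paste step can be automated. The sketch below is a hypothetical helper (the study pasted by hand into VSCode) that pulls the bodies of the Rust fenced code blocks out of a Markdown answer, ignoring the surrounding prose and blocks in other languages such as TOML.

```python
import re

FENCE = "`" * 3  # a Markdown code fence, built at run time

# An opening fence tagged "rust", then everything up to a line that is a closing fence.
BLOCK_RE = re.compile(
    re.escape(FENCE) + r"rust[^\n]*\n(.*?)^" + re.escape(FENCE) + r"[ \t]*$",
    re.DOTALL | re.MULTILINE,
)


def extract_rust_blocks(answer: str) -> list[str]:
    """Return the bodies of the Rust fenced code blocks in a Markdown answer."""
    return [body.rstrip("\n") for body in BLOCK_RE.findall(answer)]
```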

By asking nicely, I also get Cargo.toml, the file that manages dependencies and compilation in Rust. It's not a very complicated file, but its main characteristic is that it has no equivalent at all in the original Python program. It's a small addition that requires a summary of the program, like the summaries LLMs often produce. It is important to request this Cargo.toml file only after the Rust code files have been generated; otherwise the LLM runs the risk of omitting libraries, because at the beginning it has not yet considered everything it will need.
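Since the model can still forget a library, a quick cross-check between the generated Rust files and Cargo.toml helps catch omissions before compiling. This is a deliberately simple, regex-based sketch with hypothetical helper names, not a Rust or TOML parser: it only looks at `use` declarations and at one-line entries in the `[dependencies]` table, and it relies on the fact that a crate name containing `-` is written with `_` in Rust code.

```python
import re

USE_RE = re.compile(
    r"^\s*(?:pub(?:\([^)]*\))?\s+)?use\s+(?:::)?([A-Za-z_][A-Za-z0-9_]*)",
    re.MULTILINE,
)
BUILTIN_ROOTS = {"std", "core", "alloc", "crate", "self", "super"}


def used_crates(rust_sources: list[str]) -> set[str]:
    """External crate names appearing at the root of `use` declarations."""
    crates = set()
    for source in rust_sources:
        crates.update(USE_RE.findall(source))
    return crates - BUILTIN_ROOTS


def declared_dependencies(cargo_toml: str) -> set[str]:
    """Names from the [dependencies] table (one-line entries only)."""
    deps, in_table = set(), False
    for raw in cargo_toml.splitlines():
        line = raw.strip()
        if line.startswith("["):
            in_table = line == "[dependencies]"
        elif in_table and "=" in line and not line.startswith("#"):
            # Cargo names may contain '-', which Rust code spells '_'.
            deps.add(line.split("=", 1)[0].strip().replace("-", "_"))
    return deps


def missing_dependencies(rust_sources: list[str], cargo_toml: str) -> set[str]:
    return used_crates(rust_sources) - declared_dependencies(cargo_toml)
```

Crates referenced by full path without a `use` line (for example `serde_json::from_str(...)`) are not detected; the compiler remains the final judge.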

Miraculously, the copied-and-pasted program seems ready to run, with the usual LLM self-assurance that we have the truth. That isn't quite the case: among other things, the program doesn't compile. To fix this, we're going to start learning... in a future article.

First stage review

As you will have noticed, with LLMs we spent more time planning what we were going to do and why, and less time actually generating code. At this stage, the time spent on the project is around 8 hours, two of which were spent generating code.